AWS Scaling - The ELB Surge Queue

til | aws | elb | scaling | cloud

AWS Elastic Load Balancers (ELBs) scale automatically, but during a large, sudden burst of traffic they need time to add capacity to handle the increased load.

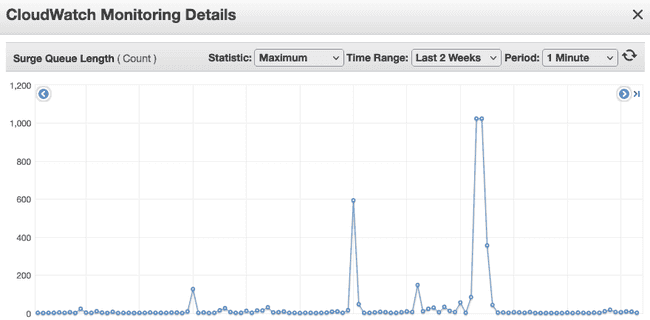

During this time, any incoming requests the ELB is unable to service are pushed onto the ELB's surge queue.

The surge queue has a fixed maximum length of 1,024 requests, and this limit cannot be configured.

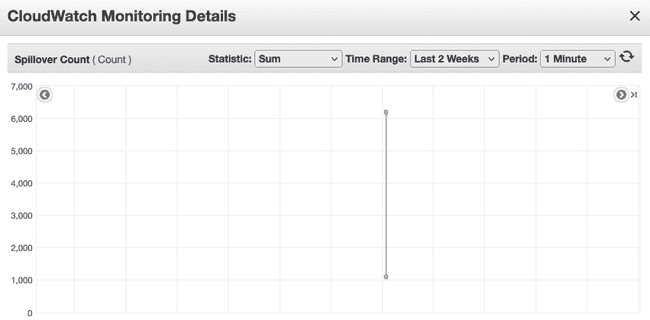

If the surge queue fills, additional requests to the ELB fail. This is known as surge queue "spillover": a spilled-over request is any request that could not be queued because the surge queue was already full.
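To make the queue-then-spillover behaviour concrete, here is a minimal toy model in Python. It is only a sketch: the per-tick arrival and capacity numbers are made up for illustration, and the real ELB does not expose or document its scaling in these terms. The only figure taken from above is the 1,024-request queue limit.

```python
SURGE_QUEUE_LIMIT = 1024  # fixed surge queue length described above


def simulate(arrivals_per_tick, capacity_per_tick, queue_limit=SURGE_QUEUE_LIMIT):
    """Toy model: return a (queued, spillover) pair for every tick.

    arrivals_per_tick: new requests arriving in each tick (hypothetical numbers)
    capacity_per_tick: requests the load balancer can serve in each tick
    """
    queued = 0
    history = []
    for arrivals, capacity in zip(arrivals_per_tick, capacity_per_tick):
        pending = queued + arrivals          # backlog plus new requests
        served = min(pending, capacity)      # what the ELB can handle this tick
        waiting = pending - served           # requests that need to be queued
        spillover = max(0, waiting - queue_limit)  # rejected: queue is full
        queued = waiting - spillover         # never exceeds queue_limit
        history.append((queued, spillover))
    return history


if __name__ == "__main__":
    # A burst arrives at tick 1; capacity only catches up at tick 3.
    arrivals = [100, 2000, 2000, 2000, 100]
    capacity = [500, 500, 1000, 3000, 3000]
    for tick, (queued, spilled) in enumerate(simulate(arrivals, capacity)):
        print(f"tick {tick}: queued={queued} spillover={spilled}")
```

In this made-up scenario the queue pins at 1,024 while capacity lags behind the burst, requests are rejected during those ticks, and the backlog drains once capacity catches up.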

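When requests start to spill over, the ELB returns HTTP 503 responses to clients (for TCP listeners, it closes the connection instead). As such, it's a good idea to have automated monitoring in place on the surge queue metrics, `SurgeQueueLength` and `SpilloverCount`, which the ELB publishes to CloudWatch.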
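As a rough sketch of what that monitoring could look like, the snippet below builds the parameters for a CloudWatch alarm on the Classic Load Balancer `SpilloverCount` metric and passes them to boto3's `put_metric_alarm`. The load balancer name, the SNS topic ARN, the alarm name, and the "alert on any spillover in a one-minute window" threshold are all assumptions chosen for illustration, so tune them to your own setup.

```python
def spillover_alarm_params(load_balancer_name, sns_topic_arn):
    """Build keyword arguments for CloudWatch put_metric_alarm.

    Both arguments are placeholders supplied by the caller; the alarm
    name and threshold below are illustrative choices, not AWS defaults.
    """
    return {
        "AlarmName": f"{load_balancer_name}-surge-queue-spillover",
        "Namespace": "AWS/ELB",  # Classic Load Balancer metrics namespace
        "MetricName": "SpilloverCount",
        "Dimensions": [
            {"Name": "LoadBalancerName", "Value": load_balancer_name},
        ],
        "Statistic": "Sum",       # total rejected requests in the period
        "Period": 60,             # seconds
        "EvaluationPeriods": 1,
        "Threshold": 0,
        "ComparisonOperator": "GreaterThanThreshold",  # alarm on any spillover
        "TreatMissingData": "notBreaching",  # no datapoints means no spillover
        "AlarmActions": [sns_topic_arn],
    }


def create_spillover_alarm(cloudwatch_client, load_balancer_name, sns_topic_arn):
    """Create (or update) the alarm using a boto3 CloudWatch client."""
    params = spillover_alarm_params(load_balancer_name, sns_topic_arn)
    cloudwatch_client.put_metric_alarm(**params)
    return params["AlarmName"]
```

With boto3 installed and credentials configured, calling `create_spillover_alarm(boto3.client("cloudwatch"), "my-classic-elb", topic_arn)` would register the alarm, where `"my-classic-elb"` and `topic_arn` are hypothetical values. A similar alarm on the `Maximum` of `SurgeQueueLength` can give earlier warning, since the queue has to fill before anything spills over.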

This issue typically resolves itself once the ELB has scaled up.

We can avoid spillover by "pre-warming" the ELB, so that it is already scaled appropriately before an expected surge. Pre-warming may require contacting AWS Support.

Copyright © 2022 Darren Burns